Opening RunPod and seeing a wall of templates can be confusing. There are official images, community builds, ComfyUI setups, Flux stacks, LLM templates, and serverless options. Most creators do not want to learn infrastructure. They just want a template that works, is affordable, and gets them from idea to output without CUDA errors.

This guide is for that.

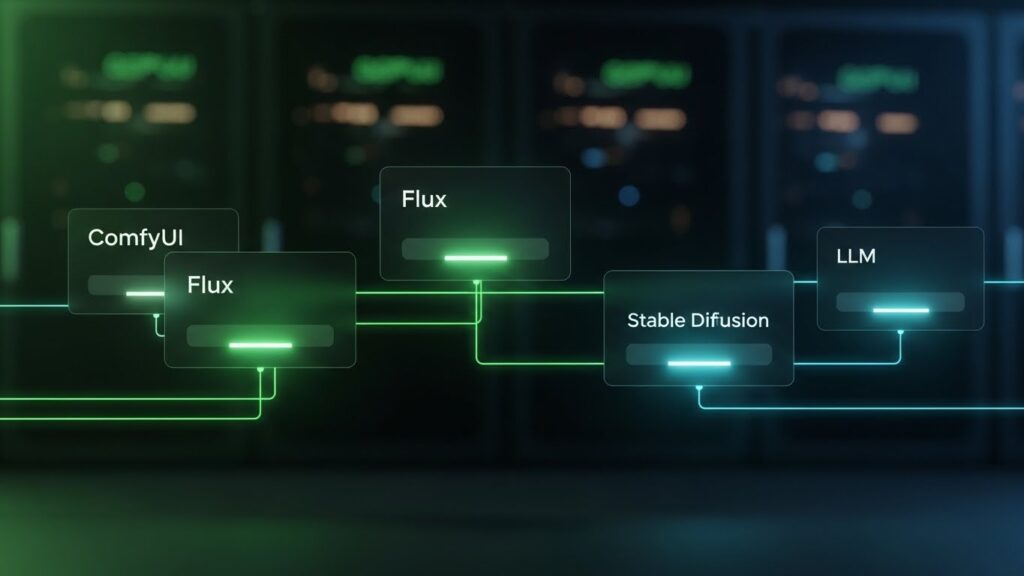

We will walk through the best RunPod templates for AI creators in 2025, including ComfyUI, Flux, Stable Diffusion, and LLM chatbots. You will see which templates fit image, video, and text workflows, which GPUs to choose, and when it makes sense to build your own template.

By the end, you will know which RunPod template to launch for your workflow and how to get your first render or chatbot online with minimum friction.

This article includes affiliate links. If you sign up through these links, I may earn a commission at no extra cost to you.

What Are RunPod Templates and Why Creators Use Them

Quick definition

A RunPod template is a preconfigured GPU environment you can launch in a few clicks. Instead of installing CUDA, PyTorch, ComfyUI, Stable Diffusion, or an LLM stack yourself, you start from a ready-made image that already has the core software installed.

Two main types:

- Official templates built or maintained by RunPod

- Community templates created by power users and shared in the template library

Both exist to save you setup time and reduce the chance of breaking your environment.

What a template includes

A template is more than a single container image. It usually includes:

- A container image with the main tools installed

- Suggested GPU and volume settings

- Default ports and startup commands so the web UI works on first launch

For creators this means less time copy-pasting install commands and more time actually generating images, videos, or scripts.

Why templates matter for AI creators

Templates are especially useful if you:

- Do not want to maintain drivers or OS updates

- Need consistent environments across projects or clients

- Want to test newer models like Flux or Wan without touching a local rig

You treat infrastructure as a commodity. Pick a template, choose a GPU, and get back to creating.

How To Choose The Right RunPod Template

Before you scroll the list, answer three questions.

1. What is your primary goal?

Most AI creators fall into one or more of these buckets:

- Image generation: Stable Diffusion, SDXL, ComfyUI workflows, thumbnails, AI influencers

- Video or motion: image sequences, motion models like Wan, early image-to-video work

- Text and chat: LLM chatbots, scriptwriters, idea generators, caption tools

Once you know the output, the shortlist of templates gets smaller.

2. How much VRAM do you really need?

In simple terms:

- Standard SD and SDXL can run on modest GPUs

- Flux and heavier video models need more VRAM and run best on higher-end cards

- LLMs need enough VRAM to hold the model weights plus the context; a model that does not fit has to be partly offloaded to the CPU, which is much slower, and longer context windows need extra room

Practical rule:

- Learning SD or ComfyUI only: start on a mid-range GPU

- Serious Flux portraits, large models, or LLM plus image stacks: use a higher VRAM tier

You can always start smaller, watch for out-of-memory (OOM) errors, and scale up.
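To put a number on "how much VRAM" for LLMs, a common rule of thumb is parameters × bits per parameter ÷ 8 for the weights, plus some headroom. The sketch below assumes a 20% overhead factor for the context cache and runtime buffers; that factor is an illustrative assumption, and real usage depends on the serving software, quantization format, and context length.

```python
def estimate_llm_vram_gb(params_billion: float, bits_per_param: int = 16,
                         overhead_factor: float = 1.2) -> float:
    """Rough VRAM estimate in decimal GB for serving an LLM.

    Weights take params x bits / 8 bytes; overhead_factor (an assumed 20%)
    stands in for the context cache, activations, and CUDA runtime.
    """
    if params_billion <= 0 or bits_per_param <= 0 or overhead_factor < 1:
        raise ValueError("inputs must be positive and overhead_factor >= 1")
    # Billions of parameters x bytes per parameter = gigabytes of weights.
    weights_gb = params_billion * bits_per_param / 8
    return round(weights_gb * overhead_factor, 1)


def fits_on_gpu(params_billion: float, bits_per_param: int, gpu_vram_gb: float) -> bool:
    """True if the rough estimate fits within the GPU's VRAM."""
    return estimate_llm_vram_gb(params_billion, bits_per_param) <= gpu_vram_gb


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"7B model at {bits}-bit: ~{estimate_llm_vram_gb(7, bits)} GB")
```

On these assumptions a 7B model needs roughly 16.8 GB at 16-bit but only about 4.2 GB at 4-bit, which is why quantized models fit comfortably on mid-range cards.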

3. Official vs community vs custom

Most people move through three stages:

- Official templates: stable and lean, ideal for first deployments

- Community templates: add features like ComfyUI Manager, preloaded models, and extra tools; great for moving fast once you know what you want

- Custom templates: start from a template you like, install your favorite nodes and models, then save it as your own; best once your workflow is stable and you are tired of repeating setup

Best Official RunPod Templates For AI Creators

Official templates are the safest way to start. They are maintained, documented, and usually minimal.

PyTorch or JupyterLab for experimentation

These templates are good if you like notebooks or code-heavy work. They are ideal for:

- Training experiments

- Prototyping mixed workflows

- Combining model calls inside a single notebook

You get a clean stack without being locked into a GUI.
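A useful first cell in a PyTorch or JupyterLab pod confirms that the GPU you are paying for is actually visible. This minimal sketch assumes the template ships PyTorch (it reports that case instead of crashing if not); `torch.cuda.is_available`, `current_device`, `get_device_name`, and `mem_get_info` are standard PyTorch calls.

```python
def gpu_summary() -> dict:
    """Report whether PyTorch sees a CUDA GPU, and how much VRAM it has."""
    try:
        import torch  # assumed to be preinstalled by the template
    except ImportError:
        return {"torch_installed": False, "cuda_available": False}

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        device = torch.cuda.current_device()
        free_bytes, total_bytes = torch.cuda.mem_get_info(device)
        info["device_name"] = torch.cuda.get_device_name(device)
        info["vram_total_gb"] = round(total_bytes / 1e9, 1)
        info["vram_free_gb"] = round(free_bytes / 1e9, 1)
    return info


if __name__ == "__main__":
    for key, value in gpu_summary().items():
        print(f"{key}: {value}")
```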

Stable Diffusion Web UI template

For classic image generation, an official Stable Diffusion Web UI template is hard to beat:

- Familiar interface if you have used Automatic1111-style UIs

- Quick prompt and sampler controls

- Solid choice for general image work and client previews

If you are not ready for node-based systems like ComfyUI, start here.

Official LLM inference templates

LLM inference templates expose models through a web UI or API. They shine when you:

- Need a chatbot or script assistant

- Want to generate YouTube scripts, hooks, or social captions

- Prefer controlling an LLM on your own GPU pod

For many creators this becomes the permanent writing assistant next to an image pod.

Best Community ComfyUI Templates On RunPod

ComfyUI is where many image-focused creators end up. It is flexible, node-based, and works well with modern models. Community templates let you skip the heavy install.

Lightweight ComfyUI plus Manager

A lightweight ComfyUI template with ComfyUI Manager is usually the best starting point:

- Minimal bloat and smaller image size

- ComfyUI Manager lets you install new nodes and extensions in a few clicks

- Lower storage cost because you only add what you need

Pick this if you want control over which models and nodes live in your environment.

ComfyUI plus Jupyter all-in-one

Some templates bundle ComfyUI, Jupyter, and a set of preloaded SD and SDXL models:

- Convenient studio in a single pod

- Nice if you want to mix GUI workflows with notebook tweaks

- Helpful when you do not want to wait for large models to download on first run

You trade some storage for speed and convenience.

Trainer style templates with Kohya and more

Other templates bundle:

- ComfyUI

- Automatic1111-style UI

- Training tools like Kohya_ss

These are aimed at creators who want to train LoRAs, fine-tune models, or build branded influencers. One pod gives you training, inference, and experimentation.

Pros and cons of community templates

Pros:

- Save hours of setup

- Often include best-practice configs and quality-of-life tweaks

- Easy to find in the template library

Cons:

- Can become outdated if the maintainer stops updating

- May ship extra tools you will never use, which increases size

If you rely on one template heavily, keep backups of your workflows and key models.

Best Flux Templates For High End Image And Video

Flux models are popular for photoreal portraits, AI influencers, and polished visuals. They also demand more VRAM, so a good template matters.

ComfyUI with Flux 1 dev or Flux 2

Look for community templates that explicitly mention ComfyUI with Flux 1 dev or Flux 2 preinstalled. The best ones usually include:

- ComfyUI with working Flux workflows

- ComfyUI Manager for easy node and model handling

- Recommended GPU and VRAM notes

This is the most direct path if your goal is high-quality portraits, brand characters, or influencer-style content. Once it is running, you can plug in LoRAs, face swap nodes, and upscalers.

Flux Kontext templates

If you work on storytelling, carousels, or sets of related images, templates built around Flux Kontext can help:

- They let you edit and build on existing images using reference inputs and text instructions, not just a fresh prompt

- Style and identity can stay consistent across shots

- Strong for narrative content or multi-panel posts

They are more advanced but powerful once you are comfortable with ComfyUI basics.

Serverless ComfyUI plus Flux endpoints

For developers, RunPod serverless options let you deploy Flux workflows as API endpoints instead of a GUI:

- Good for automated thumbnail pipelines

- Easy to integrate into web apps or bots

- Great for batch jobs without logging in every time

You work more with code, but you gain automation and scale.
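A minimal standard-library sketch of calling a serverless endpoint. RunPod's serverless API accepts jobs at `/v2/<endpoint_id>/run` (asynchronous) or `/v2/<endpoint_id>/runsync` (waits for the result); the endpoint ID and API key are yours to supply, and the `{"input": {"workflow": ...}}` body is an assumption, since the input schema depends on the worker image behind your endpoint.

```python
import json
import urllib.request

API_BASE = "https://api.runpod.ai/v2"  # RunPod serverless API base


def build_run_request(endpoint_id: str, api_key: str, workflow: dict,
                      sync: bool = False) -> urllib.request.Request:
    """Build (but do not send) a job request for a serverless endpoint.

    The {"input": {"workflow": ...}} shape is an assumption: the exact input
    schema depends on the worker image deployed to your endpoint.
    """
    route = "runsync" if sync else "run"
    body = json.dumps({"input": {"workflow": workflow}}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/{endpoint_id}/{route}",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def submit(request: urllib.request.Request) -> dict:
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read().decode("utf-8"))
```

An asynchronous `/run` call returns a job ID that you poll for the result, which suits batch jobs; `/runsync` is simpler for short jobs like a single thumbnail. Keep the API key in an environment variable rather than in the script.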

Templates For LLM Chatbots, Scriptwriting And Assistants

Visuals are only half the work. Many creators need solid text too.

Oobabooga Text Generation Web UI

Text Generation Web UI (often called Oobabooga) templates give you:

- A chat style interface for LLMs

- Options for different models and sampling settings

- Fine control over style and tone through system prompts and sampling settings

It is perfect if you want a private scriptwriter that runs on your own pod.

Prebuilt LLM inference templates

LLM inference templates focus on programmatic access:

- You get a running model with a clean endpoint

- You can wire this into small tools that generate hooks, descriptions, or chat flows

- Easy to pair with an image pod in a simple stack
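Many LLM serving stacks, including Text Generation Web UI when its API is enabled, expose an OpenAI-compatible chat endpoint. The sketch below assumes your pod does the same at `/v1/chat/completions`; the base URL, model name, and prompt wording are placeholders to adapt.

```python
import json
import urllib.request


def build_chat_request(base_url: str, topic: str, model: str = "local-model",
                       max_tokens: int = 400) -> urllib.request.Request:
    """Build a chat request asking the LLM pod for image prompts about a topic.

    Assumes an OpenAI-compatible /v1/chat/completions route. The model name is
    a placeholder; some servers ignore it, others need the loaded model's name.
    """
    payload = {
        "model": model,
        "messages": [
            {"role": "system",
             "content": "You write short, vivid image prompts, one per line."},
            {"role": "user",
             "content": f"Write 3 thumbnail prompts for a video about {topic}."},
        ],
        "max_tokens": max_tokens,
        "temperature": 0.8,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion."""
    return response_json["choices"][0]["message"]["content"]
```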

A common pattern is: use the LLM pod to outline scenes and prompts, then send those prompts to a ComfyUI or Flux pod.

When to mix LLM and image pods

A simple two-pod stack covers most content workflows:

- Pod 1: LLM for ideas, scripts, and captions

- Pod 2: ComfyUI or Flux for visuals

You do not need one giant template that does everything. Two focused pods are easier to manage and upgrade.
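The glue between the two pods can stay small. This sketch assumes the LLM returns one prompt per line and that your ComfyUI workflow was exported in API format; the node ID `"6"` used in the check below is hypothetical, so look up the ID of the positive `CLIPTextEncode` node in your own export. Each returned payload is what you would POST to the ComfyUI pod's `/prompt` route.

```python
import copy


def split_prompts(llm_text: str) -> list[str]:
    """Turn an LLM reply into prompts, one per non-empty line.

    Strips leading "-" or "*" bullets and "1." / "2)" style numbering.
    """
    prompts = []
    for line in llm_text.splitlines():
        cleaned = line.strip().lstrip("-*").strip()
        head, sep, rest = cleaned.partition(" ")
        # Drop "1." or "2)" markers, but keep prompts like "2024 trends".
        if sep and len(head) > 1 and head[-1] in ".)" and head[:-1].isdigit():
            cleaned = rest.strip()
        if cleaned:
            prompts.append(cleaned)
    return prompts


def build_comfy_payload(workflow: dict, prompt_text: str, text_node_id: str) -> dict:
    """Return a ComfyUI /prompt payload with the positive prompt replaced.

    `workflow` must be in ComfyUI's API format; `text_node_id` is the ID of
    its positive CLIPTextEncode node (check your own export).
    """
    if text_node_id not in workflow:
        raise KeyError(f"node {text_node_id!r} not found in workflow")
    wf = copy.deepcopy(workflow)  # leave the template workflow untouched
    wf[text_node_id]["inputs"]["text"] = prompt_text
    return {"prompt": wf}
```

Copying the workflow for each prompt keeps the original intact, so you can loop over every line the LLM returns and queue one render per prompt without side effects.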

Cost And Performance: Which Templates Save Money

Templates only help if they are affordable enough to use often. Picking the right GPU tier is where you save or burn money.

Typical GPU tiers

Think in three levels:

- Budget or test: for basic SD and small LLMs; good for learning and light experiments

- Balanced mid tier: handles SDXL, ComfyUI workflows, and many Flux setups; the sweet spot for most solo creators

- High-end or pro: for large LLMs and heavy image or video tasks; makes sense when you run paid services or high-volume jobs

If you mostly make still images, budget and mid tier are usually enough. Heavy training or multi model pipelines justify higher tiers.

Template cost comparison

The table below compares common template types by relative cost. GPU names and hourly prices change often, so check current rates before you launch:

| Template type | GPU tier | Workload | Cost profile | Best for |
|---|---|---|---|---|
| Stable Diffusion Web UI | Budget / Mid | Standard SD and SDXL images | Low | Beginners and quick samples |
| Lightweight ComfyUI | Mid | SDXL, light Flux, LoRAs | Medium | Flexible image workflows |
| ComfyUI with Flux | Mid / High | High end portraits and influencers | Medium / High | Serious image creators and client work |
| Oobabooga or LLM inference | Mid / High | Scripts and chatbots | Medium | Text heavy workflows |
| Training templates with Kohya | High | LoRA and custom model training | High | Advanced creators and training projects |

These are relative cost profiles, not exact prices. Use them to choose a tier, then confirm the current hourly rate for the specific GPU you pick.
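Hourly price alone can mislead, because a faster GPU finishes each job sooner. One quick way to compare tiers is cost per output: hourly rate × seconds per output ÷ 3600. The rates and timings below are hypothetical placeholders, not RunPod prices; plug in numbers from your own test renders.

```python
def cost_per_output(hourly_rate_usd: float, seconds_per_output: float,
                    outputs: int = 1) -> float:
    """GPU cost in USD for `outputs` renders, counting generation time only.

    Idle time, model loading, and storage are ignored, so real bills run higher.
    """
    gpu_hours = seconds_per_output * outputs / 3600
    return round(hourly_rate_usd * gpu_hours, 4)


if __name__ == "__main__":
    # Hypothetical tiers: a cheaper, slower card vs. a pricier, faster one.
    slower = cost_per_output(0.40, seconds_per_output=20, outputs=100)
    faster = cost_per_output(0.80, seconds_per_output=8, outputs=100)
    print(f"100 images: ${slower} on the slower card, ${faster} on the faster card")
```

In this made-up example, the card that costs twice as much per hour is cheaper per batch (about $0.18 vs. $0.22 per 100 images) because it renders 2.5 times faster.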

Simple ROI check vs buying a GPU

Cloud GPUs make sense when:

- Your usage is part-time or project-based

- You cannot justify a big upfront GPU purchase

- You want the option to scale up for peak demand

Estimate your GPU hours per month and multiply by an approximate hourly rate. Compare that to the price of a local GPU spread over 12 to 24 months, plus power and your own maintenance time. For part-time use, a RunPod-first approach often comes out ahead.
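The same estimate as a small script. Every number in the example run is a hypothetical placeholder: swap in your real monthly hours, a current hourly rate, your local GPU price, its power draw, and your electricity cost. It ignores resale value and the value of your own time, so treat the result as a rough guide.

```python
import math


def monthly_cloud_cost(hours_per_month: float, hourly_rate: float,
                       storage_per_month: float = 0.0) -> float:
    """Pay-as-you-go GPU time plus any persistent storage you keep."""
    return hours_per_month * hourly_rate + storage_per_month


def monthly_local_cost(gpu_price: float, amortize_months: int, watts: float,
                       hours_per_month: float, price_per_kwh: float) -> float:
    """Purchase price spread over `amortize_months`, plus electricity."""
    power_cost = watts / 1000 * hours_per_month * price_per_kwh
    return gpu_price / amortize_months + power_cost


def break_even_hours(gpu_price: float, amortize_months: int, watts: float,
                     price_per_kwh: float, hourly_rate: float) -> float:
    """Monthly GPU hours above which owning the card becomes cheaper."""
    local_power_per_hour = watts / 1000 * price_per_kwh
    margin = hourly_rate - local_power_per_hour
    if margin <= 0:
        return math.inf  # cloud time costs less than your electricity alone
    return (gpu_price / amortize_months) / margin


if __name__ == "__main__":
    # All figures are hypothetical placeholders.
    hours, rate = 40, 0.70
    print(f"Cloud: ${monthly_cloud_cost(hours, rate):.2f}/month")
    print(f"Local: ${monthly_local_cost(1800, 18, 450, hours, 0.30):.2f}/month")
    print(f"Break-even: {break_even_hours(1800, 18, 450, 0.30, rate):.0f} h/month")
```

With these placeholders, 40 GPU hours a month costs about $28 in the cloud versus about $105 locally, and the local card only wins above roughly 177 hours a month.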

How To Launch Your First RunPod Template

Once you know which template fits, getting your first pod running is straightforward.

Create an account and add credits

- Sign up for a RunPod account.

- Add a payment method and load some credits.

- If you want to support this guide and get started, you can sign up using my referral link (Minimum $5 bonus):

runpod.io

You do not need a large balance to begin. Start small and scale as you learn.

Find the template in the library

From the dashboard:

- Open the section that lists templates

- Search for terms like “ComfyUI”, “Stable Diffusion”, “Flux”, or “Text Generation Web UI”

- Open the template details and read the notes, GPU suggestions, and any warnings

This is where you confirm that the template matches your use case.

Configure GPU, volume and environment

On deploy:

- Choose a GPU tier that matches your needs and budget

- Set a persistent volume size big enough for models and workflows

- Add any required environment variables or startup commands mentioned in the template description

You can clone this configuration later and adjust as needed.
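Sizing the persistent volume is simple arithmetic: add up the models you plan to keep, add room for outputs, and leave a safety margin. The model names and sizes below are placeholders (check the file sizes on each model's download page), and the 25% headroom is an assumption you can adjust.

```python
import math


def volume_size_gb(model_sizes_gb: dict[str, float], outputs_gb: float = 10.0,
                   headroom: float = 0.25) -> int:
    """Suggest a persistent volume size: models + outputs + safety margin."""
    base_gb = sum(model_sizes_gb.values()) + outputs_gb
    return math.ceil(base_gb * (1 + headroom))


if __name__ == "__main__":
    # Placeholder names and sizes; use the real file sizes of your models.
    models = {"sdxl_checkpoint": 7.0, "flux_checkpoint": 24.0, "loras": 2.0}
    print(f"Suggested volume: {volume_size_gb(models)} GB")
```

For the placeholder list above, that works out to a 54 GB volume.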

Connect and run your first workflow

After the pod starts:

- Use “Connect via HTTP” to open ComfyUI, Stable Diffusion, or Oobabooga

- Run a small test workflow to confirm everything is working

- Save workflows and key assets to the persistent volume

You now have a repeatable environment you can stop and restart when needed.
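If you prefer to check the pod from a script instead of the browser, you can call the same HTTP address the Connect button opens. This sketch assumes RunPod's proxy pattern `https://<pod_id>-<port>.proxy.runpod.net` and ComfyUI on port 8188 answering its `/system_stats` route; your template may expose a different port, so check its details page.

```python
import json
import urllib.request


def proxy_url(pod_id: str, port: int) -> str:
    """RunPod's HTTP proxy address for a port exposed on a pod (assumed pattern)."""
    return f"https://{pod_id}-{port}.proxy.runpod.net"


def comfyui_is_up(base_url: str, timeout: float = 10.0) -> bool:
    """Return True if ComfyUI answers its /system_stats route with JSON."""
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/system_stats",
                                    timeout=timeout) as response:
            json.loads(response.read().decode("utf-8"))
            return response.status == 200
    except (OSError, ValueError):
        # OSError covers connection errors, HTTP errors, and timeouts;
        # ValueError covers a response that is not valid JSON.
        return False
```

For example, `comfyui_is_up(proxy_url("your-pod-id", 8188))` returns `True` once the UI is ready, which is handy before queueing a batch.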

When To Build Your Own Custom RunPod Template

At some point you will get tired of repeating the same installs every time you launch a pod. That is your signal.

Signs you are ready for a custom template

You are probably ready if:

- You install the same ComfyUI nodes or extensions on every launch

- You rely on specific library versions

- You want teammates or clients to use the same environment

Public templates are great for exploration. Custom templates are for your “production” stack.

Basic workflow for your own template

- Start from an official or community template that is close to what you want

- Install your preferred models, nodes, and scripts

- Remove anything you do not need

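- Save the setup as your own reusable template. RunPod templates point to a container image, so in practice this usually means building an image that starts from the same base and adds your nodes and scripts, pushing it to a registry, and creating a new template that uses it. Large models are often better kept on a persistent volume than baked into the image.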

From then on you spin up new pods from your own image and skip setup.

Safety and maintenance

If you keep your own template:

- Maintain a short README with what is inside and versions you depend on

- Test after major CUDA or driver changes

- Back up important models and workflows outside the pod

That small bit of discipline prevents nasty surprises mid project.
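Part of that README can be generated. This sketch uses Python's standard `importlib.metadata` to record installed package versions; the package names you pass in are up to you, and the heading text it produces is just an example format.

```python
from importlib import metadata


def package_versions(packages: list[str]) -> dict[str, str]:
    """Look up installed versions, marking anything missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = "not installed"
    return versions


def readme_section(versions: dict[str, str]) -> str:
    """Format versions as a short markdown list for the template README."""
    lines = ["## Pinned versions", ""]
    lines += [f"- {name}: {ver}" for name, ver in sorted(versions.items())]
    return "\n".join(lines)


if __name__ == "__main__":
    print(readme_section(package_versions(["torch", "torchvision", "xformers"])))
```

Running it inside the pod after each change gives you a dated record to compare against when a CUDA or driver update breaks something.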

If you want to turn RunPod into a real production tool, the next step is simple: pick one template from this guide, launch an affordable GPU that fits your budget, and run a real project through it. The best template for you is the one that lets you ship work consistently.

FAQs About RunPod Templates For Creators

Do RunPod templates cost anything?

Templates themselves are free. You pay for GPU time while pods run, plus storage for any volumes you keep, even when a pod is stopped.

Which GPUs do RunPod templates support?

RunPod offers both consumer and data center GPUs, but most popular templates are built for NVIDIA and CUDA. Before launching, check which GPU types are available in your region and which ones the template supports.

Can I customize a public template and save it as my own?

Yes. You can start from a public template, customize it, and save the result as a private template under your account, typically by pointing a new template at your own container image. That way you get the benefits of the original plus your own tweaks.