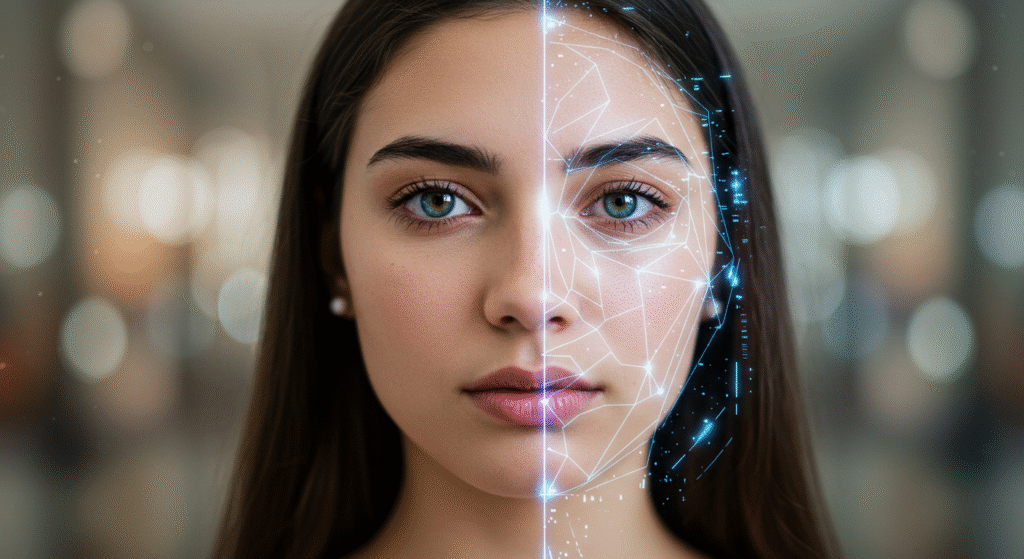

What if you woke up to a video of yourself online—doing or saying things you never did? Welcome to the AI Doppelgänger Dilemma, where deepfakes and synthetic media are changing the way we trust what we see and hear.

From viral celebrity deepfakes on TikTok to voice-cloned scam calls, synthetic media isn’t just a tech buzzword anymore. It’s everywhere, and it’s blurring the line between what’s real and what’s fake. Let’s break down how it works, why it matters, and what you need to know to protect yourself.

What Is the AI Doppelgänger Dilemma?

The AI Doppelgänger Dilemma is about the rise of hyper-realistic digital copies, or AI “doppelgängers,” that can mimic your face, your voice, and even aspects of your personality. They are built with techniques such as Generative Adversarial Networks (GANs) and, increasingly, diffusion models, and are made accessible through commercial tools like Synthesia. These doppelgängers can be used for everything from entertainment to fraud.

Synthetic media covers a lot:

- Deepfake videos (think viral fake Tom Cruise on TikTok)

- AI voice cloning for scam calls

- Virtual influencers who don’t actually exist

- Real-time digital twins for business meetings

With the tech getting better every year, the threat (and potential) is growing fast.

The Good Side: How Synthetic Media Helps

Let’s not get it twisted—synthetic media isn’t all bad. It’s already being used for:

- Entertainment: De-aging actors, “resurrecting” musicians for concerts, or putting virtual influencers like Lil Miquela in ad campaigns.

- Education: Bringing history to life with realistic recreations, or letting students practice languages with AI-powered avatars.

- Accessibility: AI voice tech helps people with disabilities communicate, and AI-generated descriptions make media more accessible for the visually impaired.

AI can even save money and speed up content creation for small businesses or indie creators.

The Dark Side: Risks and Real-World Consequences

But here’s where the AI Doppelgänger Dilemma gets sketchy.

1. Deepfake Fraud & Identity Theft

Scammers now use AI to clone voices and faces for far more convincing phishing. In 2024, a Florida woman lost $15,000 after a call in which a cloned voice of her “daughter” begged for help after a car crash [Bitdefender, 2024]. Synthetic identity fraud alone reportedly caused $20 billion in losses in 2024, and the tools are cheap and simple enough that almost anyone can use them.

2. Misinformation & Political Manipulation

Deepfake videos of politicians, like the fake 2022 video of Ukraine’s President Zelenskyy telling his troops to surrender, can spread widely before they are debunked, making it harder to trust any “proof” online. The stakes go beyond drama: deepfakes can sway elections, fuel propaganda, and damage reputations.

3. Privacy Violations & Non-Consensual Content

AI “nudification” apps can strip clothes from images, while deepfake porn is spreading at an alarming rate, mostly targeting women and minors. Over 96% of all deepfake videos found online are pornographic [Stanford, 2024].

4. Erosion of Trust

If anyone can make a video of you doing or saying anything, how do you prove what’s real? Some experts warn that 90% of online content could be AI-generated by 2026 [The Living Library, 2024]. Trust in digital news, brands, and even your friends is at risk.

How to Navigate the AI Doppelgänger Dilemma

1. Tech That Fights Back

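Detection tools are catching up. Intel says its FakeCatcher can spot deepfakes in real time by detecting the subtle color changes that blood flow causes in a real person’s face. Google (through its SynthID tool) and OpenAI are working on watermarking AI-generated images and videos so they can be identified later.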
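To make the “blood flow” idea concrete: each heartbeat changes skin color very slightly, and that change can be measured from ordinary video, a technique called remote photoplethysmography (rPPG). The sketch below is not Intel’s implementation. It is a toy illustration that assumes you already have one average green-channel value per frame from a tracked face region; the input format and the function name `estimate_pulse_bpm` are hypothetical. It searches the normal heart-rate range for the frequency carrying the most energy in that signal.

```python
import math
from typing import Optional, Sequence


def estimate_pulse_bpm(
    green_means: Sequence[float],
    fps: float,
    min_bpm: float = 42.0,
    max_bpm: float = 240.0,
    step_bpm: float = 0.5,
) -> Optional[float]:
    """Toy rPPG pulse estimate (illustrative sketch, not a real detector).

    green_means: hypothetical input, one average green-channel value per
    frame, taken from a face region that was already located and tracked.
    Returns the candidate heart rate (beats per minute) with the most energy,
    or None if the signal is completely flat.
    """
    n = len(green_means)
    if n < 2 or fps <= 0:
        raise ValueError("need at least two frames and a positive frame rate")
    if max_bpm / 60.0 >= fps / 2.0:
        raise ValueError("frame rate too low to measure this heart-rate range")

    # Remove the constant skin brightness so only the tiny pulsing change remains.
    mean = sum(green_means) / n
    centered = [g - mean for g in green_means]

    best_bpm, best_power = None, 0.0
    steps = int(round((max_bpm - min_bpm) / step_bpm)) + 1
    for k in range(steps):
        bpm = min_bpm + k * step_bpm
        freq_hz = bpm / 60.0
        # Correlate the signal with a cosine and a sine at this candidate
        # frequency (a single term of a discrete Fourier transform).
        re = sum(x * math.cos(2 * math.pi * freq_hz * i / fps) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * freq_hz * i / fps) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm
```

For example, a synthetic 10-second clip at 30 fps whose brightness oscillates 1.2 times per second returns 72.0. A real detector does much more, for example checking whether pulse-like signals are present and consistent across different parts of the face, which a synthetic face may fail to reproduce. Treat this as an illustration of the underlying signal, not a working deepfake detector.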

2. Clear Consent and Privacy Rules

Always check what you’re agreeing to before you upload anything. Depending on a service’s terms, your selfies, voice recordings, and videos may be used to train AI models, and that permission is often buried in the fine print.

3. Laws and Regulations

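The EU AI Act requires AI-generated content, including deepfakes, to be clearly labeled, with those transparency rules taking effect in 2026. In the U.S., a growing number of states have passed laws targeting election deepfakes, and the FCC has ruled that robocalls using AI-generated voices fall under existing robocall restrictions. China requires synthetic media to be clearly marked. But regulations vary wildly from place to place, so don’t count on the law to protect you everywhere.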

4. Stay Skeptical and Informed

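If a clip seems too shocking to be true, treat it with suspicion until you can confirm it from a reliable source. Learn the common signs of a deepfake: unnatural eye blinks, inconsistent lighting or shadows, and lip movements that don’t quite match the audio. Keep in mind that newer fakes often get these details right. Companies like Sensity AI and Reality Defender also offer deepfake detection tools.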
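The eye-blink tip has a simple numeric form. A common approach, the eye aspect ratio (EAR), takes six landmark points around each eye from a facial-landmark detector and compares the eye’s height to its width; the ratio drops sharply when the eye closes. The sketch below assumes those landmarks are already available for every frame. The 0.2 threshold, the two-frame minimum, and the function names are illustrative assumptions, not values taken from any of the tools mentioned above.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio from six (x, y) landmarks ordered p1..p6.

    Assumed ordering: p1 and p4 are the eye corners, p2 and p3 lie on the
    upper lid, p6 and p5 on the lower lid (p2 above p6, p3 above p5).
    """
    if len(eye) != 6:
        raise ValueError("expected exactly six eye landmarks")
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # eye height, measured twice
    horizontal = math.dist(p1, p4)                     # eye width
    return vertical / (2.0 * horizontal)


def count_blinks(ears: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended while the eye was still closed
        blinks += 1
    return blinks


def blinks_per_minute(
    ears: Sequence[float], fps: float, threshold: float = 0.2, min_frames: int = 2
) -> float:
    """Blink rate over the whole clip; `ears` holds one EAR value per frame."""
    if fps <= 0 or not ears:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(ears) / fps / 60.0
    return count_blinks(ears, threshold, min_frames) / minutes
```

An unusually low or irregular blink rate was a telltale sign of some early deepfakes, but newer generators usually blink naturally, so a number like this is at most one weak hint among many, never proof.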

The Future: Where Is This Going?

AI doppelgängers and synthetic media are only getting smarter and more common. There’s real value if it’s used right, but the risks aren’t going anywhere. Expect more tools to help verify what’s real, and more rules to protect your identity—but also, expect more bad actors to push the limits.

So, would you trust a video of yourself online ten years from now? Maybe—but only if you know where it came from.

Key Takeaways

- AI doppelgängers are changing the rules of trust online.

- Deepfakes and synthetic media have real creative and accessibility uses, but they also make deception cheaper and easier.

- Scams, fake news, and privacy risks are growing.

- Stay smart: Use tech tools, demand transparency, and double-check what you see.